In a recent post on its Research blog, Google reveals it has discovered a way to detect a person’s heart rate through ANC (active noise canceling) earbuds.

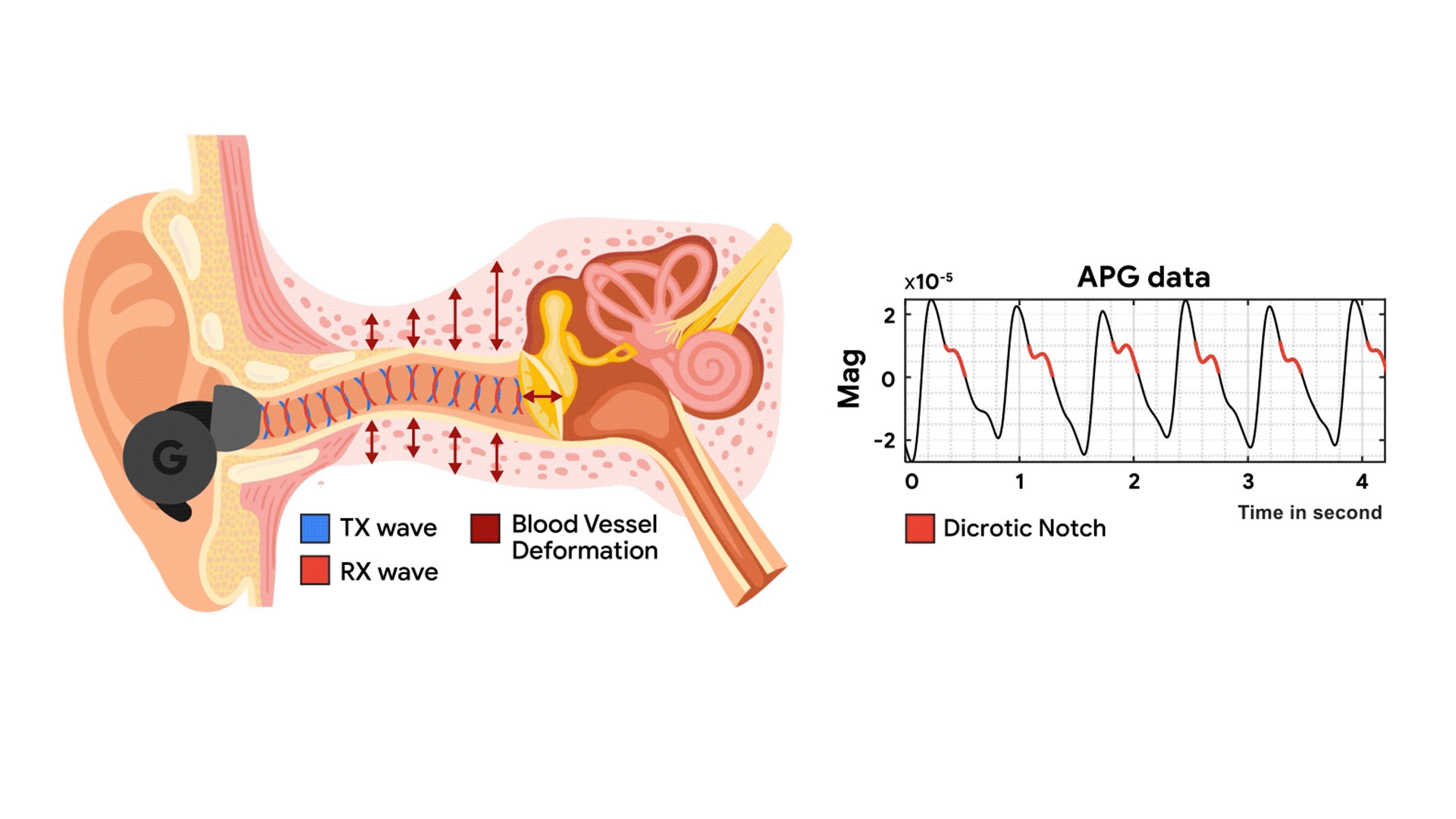

The method is called Audioplethysmography (say that three times fast), or APG for short. According to the tech giant, it works like this: the ANC earbuds send a “low-intensity ultrasound probing signal” through their speakers. The signal bounces around the ear canal, and the echoes are picked up by the earbuds’ “on-board feedback microphones.” Those echoes are modulated by “tiny ear canal skin displacement and heartbeat vibrations”.
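To make the idea a little more concrete, here is a toy Python sketch of the general principle of pulling a heart rate out of a periodically modulated signal. It is not Google’s APG algorithm. It assumes the ultrasound echo has already been demodulated into an amplitude envelope sampled at 50 Hz, it uses a synthetic envelope instead of real microphone data, and the helper name `estimate_bpm` and all parameter values are hypothetical. It simply finds the strongest frequency between 0.7 and 3 Hz (42 to 180 beats per minute) with a brute-force Fourier scan.

```python
import math
import random


def estimate_bpm(envelope, fs, lo_hz=0.7, hi_hz=3.0, step_hz=0.01):
    """Return the beats-per-minute value whose frequency carries the most
    energy in `envelope` (hypothetical helper, not Google's APG method).

    envelope: echo amplitude samples (assumed already demodulated)
    fs: sampling rate of the envelope in Hz
    """
    mean = sum(envelope) / len(envelope)
    centered = [x - mean for x in envelope]  # drop the constant (DC) level

    best_hz, best_power = lo_hz, -1.0
    n_steps = int(round((hi_hz - lo_hz) / step_hz))
    for k in range(n_steps + 1):
        f = lo_hz + k * step_hz
        # Correlate with a cosine/sine pair at frequency f (one DFT bin)
        re = sum(x * math.cos(2 * math.pi * f * n / fs) for n, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * f * n / fs) for n, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_hz, best_power = f, power
    return best_hz * 60.0  # Hz -> beats per minute


# Synthetic stand-in for a demodulated echo: a steady level, a small
# 1.2 Hz (72 bpm) pulse component, and a little random noise.
random.seed(0)
fs = 50.0      # assumed envelope sample rate, Hz
seconds = 20
envelope = [
    1.0
    + 0.05 * math.sin(2 * math.pi * 1.2 * n / fs)
    + random.gauss(0.0, 0.01)
    for n in range(int(fs * seconds))
]

bpm = estimate_bpm(envelope, fs)
print(f"estimated heart rate: {bpm:.0f} bpm")
```

Real earbud data would be far messier. As Google itself notes, body movement can heavily disturb the signal, which a simple peak search like this one would not handle.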

From the feedback signal, the company was able to measure both heart rate and heart rate variability. In other words, it could tell whether the heart was beating fast or slow. Google explains in its post that the ear canal is “an ideal location for health sensing” thanks to the dense network of blood vessels in that part of the body.

Surprisingly accurate

As part of its research, Google ran two rounds of studies with 153 participants in total. The results show the ANC earbuds were able to accurately detect heart rate with a median error of just 3.21 percent. Apparently, the devices worked even with music playing and outside noise leaking in. What’s more, the technology isn’t affected by differences in skin tone or ear canal size.

APG isn’t perfect, though: its signal “could be heavily disturbed by” body movement, which could greatly limit its use in future devices. Still, Google remains hopeful, as it believes the technology is better suited to earbuds than a standard electrocardiogram (ECG) sensor. The latter hasn’t been added to headphones because it would add “cost, weight, power consumption, [design] complexity, and form factor challenges”, preventing wide adoption.

A work in progress

Now the question is: will we see APG in the next generation of Pixel Buds, or in any earbuds for that matter? Maybe. It certainly has a lot of potential for health-conscious users who want to track their heart rate but don’t want to commit to buying a Pixel Watch 2 or a fitness band. Plus, the company claims it was able to make ANC headphones support APG with a “simple software upgrade”. So what’s the hold-up?

Well, it’s still a work in progress. According to the full paper on Google Research, the next major steps are to improve APG’s performance during rigorous exercise, including, but not limited to, hiking, weightlifting, and boxing. The team behind APG also hopes its findings can be applied to other experiments.

Google explains that ANC headphones rely on “feedback and feedforward microphones” to function. Those mics could open up “new opportunities” for other medical applications, from monitoring a person’s breathing to diagnosing ear diseases.

Until we learn more, be sure to check out TechRadar’s list of the best fitness trackers for 2023.
